In a previous post, Big Data: Don't Throw the Baby Out with the Bathwater, I made the case that in many instances we aren't concerned with issues related to causality.

"If a 'big data' app tells me that someone is spending 14 hours each week on the treadmill, that might be a useful predictor for their health status. If all I care about is identifying people based on health status I think hrs of physical activity would provide useful info. I might care less if the relationship is causal as long as it is stable. ... correlations or 'flags' from big data might not 'identify' causal effects, but they are useful for prediction and might point us in directions where we can more rigorously investigate causal relationships"

But sometimes we are interested in causal effects. If that is the case, the article that I reference in the previous post makes a salient point:

"But a theory-free analysis of mere correlations is inevitably fragile. If you have no idea what is behind a correlation, you have no idea what might cause that correlation to break down."

“Big data” has arrived, but big insights have not. The challenge now is to solve new problems and gain new answers – without making the same old statistical mistakes on a grander scale than ever."

I think that may be the case in many agronomic applications of big data. I've written previously about the convergence of big data, genomics, and agriculture. In those cases, when I think about applications like ACRES or Field Scripts, I have algorithmic approaches (finding patterns and correlations) in mind, not necessarily causation.

But Dan Frieberg points out some very important things to think about when it comes to using agronomic data in a Corn and Soybean Digest article, "Data Decisions: Meaningful data analysis involves agronomic common sense, local expertise."

He gives an example where data indicates better yields are associated with faster planting speeds, but something else is really going on:

"Sometimes, a data layer is actually a “surrogate” for another layer that you may not have captured. Planting speed was a surrogate for the condition of the planting bed. High soil pH as a surrogate for cyst nematode. Correlation to slope could be a surrogate for an eroded area within a soil type or the best part of the field because excess water escaped in a wet year."

He concludes:

"big data analytics is not the crystal ball that removes local context. Rather, the power of big data analytics is handing the crystal ball to advisors that have local context"

This is definitely a case where we might want to look more rigorously at relationships identified by data mining algorithms that may not capture this kind of local context. It may or may not apply to the seed selection algorithms coming to market these days, but as we think about all the data that can potentially be captured through the internet of things (seed choice, planting speed, depth, temperature, moisture, etc.), this could become especially important. This might call for a much more personal service, including data savvy reps to help agronomists and growers get the most from these big data apps or from the data that new devices and software tools can collect and aggregate. Data savvy agronomists will need to know the assumptions behind any predictions, analysis, or data captured by these devices and apps in order to know whether surrogate factors like those Dan mentions have been appropriately considered. And agronomists, data savvy or not, will be key in identifying these kinds of issues. Is there an app for that? I don't think there is an automated replacement for this kind of expertise, but as economist Tyler Cowen says, the ability to interface well with technology and use it to augment human expertise and judgement is the key to success in the new digital age of big data and automation.
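
To make the surrogate problem concrete, here is a minimal simulation sketch in Python. Everything in it is hypothetical: the variable names (`seedbed`, `speed`, `yield_bu`), the coefficients, and the noise levels are made up for illustration and do not come from Frieberg's article. Yield is generated from seedbed condition only, while planting speed merely tracks seedbed condition.

```python
import random

def mean(values):
    return sum(values) / len(values)

def slope(x, y):
    """Simple OLS slope of y on x (with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def residuals(x, y):
    """Residuals from regressing y on x (with an intercept)."""
    b = slope(x, y)
    a = mean(y) - b * mean(x)
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]

rng = random.Random(42)
n = 4000
seedbed, speed, yield_bu = [], [], []
for _ in range(n):
    s = rng.gauss(0, 1)                                   # seedbed condition (hypothetical, often not captured)
    seedbed.append(s)
    speed.append(5.0 + 0.8 * s + rng.gauss(0, 0.5))       # planting speed tracks seedbed condition
    yield_bu.append(180.0 + 12.0 * s + rng.gauss(0, 5))   # yield depends on seedbed only, not on speed

# Naive analysis: regress yield on planting speed alone
naive_slope = slope(speed, yield_bu)

# Adjusted analysis (Frisch-Waugh): partial seedbed condition out of both
# speed and yield, then regress residual on residual
adjusted_slope = slope(residuals(seedbed, speed), residuals(seedbed, yield_bu))

print(f"naive slope of yield on speed:    {naive_slope:.2f}")
print(f"slope after adjusting for seedbed: {adjusted_slope:.2f}")
```

Under these assumptions the naive slope is large and positive even though speed has no effect on yield, and it shrinks toward zero once seedbed condition is accounted for. The catch in practice is that the surrogate layer is often one nobody recorded, which is exactly where local expertise comes in.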

References:

Big Data…Big Deal? Maybe, if Used with Caution. http://andrewgelman.com/2014/04/27/big-data-big-deal-maybe-used-caution/

See also: Analytics vs. Causal Inference http://econometricsense.blogspot.com/2014/01/analytics-vs-causal-inference.html

An attempt to make sense of econometrics, biostatistics, machine learning, experimental design, bioinformatics, ....

Saturday, May 31, 2014

Thursday, May 29, 2014

AllAnalytics - Michael Steinhart - Doctors: Time to Unleash Medical Big Data

Examples: "Correlating grocery shopping patterns with incidence of obesity and diabetes

Measuring response rates to cholesterol-lowering drugs by correlating pharmacy refills with exercise data from wearable sensors

Correlating physical distance to hospitals and pharmacies with utilization of healthcare services

Analyzing the influence of social network connections on lifestyle choices and treatment compliance."

Friday, May 2, 2014

Big Data: Don't Throw the Baby Out with the Bathwater

"Data and algorithms alone will not fulfill the promises of “big data.” Instead, it is creative humans who need to think very hard about a problem and the underlying mechanisms that drive those processes. It is this intersection of creative critical thinking coupled with data and algorithms that will ultimately fulfill the promise of “big data.”

From: http://andrewgelman.com/2014/04/27/big-data-big-deal-maybe-used-caution/

I couldn't agree more. I think the above article is interesting because, on one hand, people can get carried away about 'big data', but on the other hand they can throw the big data baby out with the bath water. It's true, there is no law of large numbers that implies that as n approaches infinity selection bias and unobserved heterogeneity go away. Correlations in large data sets still do not imply causation. But I don't think people that have seriously thought about the promises of 'big data' and predictive analytics believe that anyway. In fact, if we are trying to predict or forecast vs. make causal inferences, selection bias can be our friend. We can still get useful information from an algorithm. If a 'big data' app tells me that someone is spending 14 hours each week on the treadmill, that might be a useful predictor for their health status. If all I care about is identifying people based on health status I think hrs of physical activity would provide useful info. I might care less if the relationship is causal as long as it is stable. Maybe there are lots of other factors correlated with time at the gym like better food choices, stress management, or even income and geographic and genetic related factors. But in a strictly predictive framework, this kind of 'healthier people are more likely to go to the gym anyway' selection bias actually improves my prediction without having to have all of the other data involved. The rooster crowing does not cause the sun to come up, but if I'm blindfolded and don't have an alarm clock, hearing the crow might serve as a decent indicator that dawn is approaching. As long as I can reliably identify healthy people I may not care about the causal connection between hours at the gym and health status, or any of the other variables that may actually be more important in determining health status. It may not be worth the cost of collecting that data if I get decent predictions without it.

Similarly, if I can get a SNP profile that correlates with some health or disease status, it may tell me very little about what is really going on from a molecular or biochemical or 'causal' standpoint, but the test might be very useful. In both of these cases correlations or 'flags' from big data might not 'identify' causal effects, but they are useful for prediction and might point us in directions where we can more rigorously investigate causal relationships if interested, and 'big data' or having access to more data or richer or novel data never hurts. If causality is the goal, then merge 'big data' from the gym app with biometrics and the SNP profiles and employ some quasi-experimental methodology to investigate causality.
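
The distinction between predicting and intervening can be sketched with a toy simulation. The setup is entirely hypothetical: an unobserved `lifestyle` variable drives both gym hours and health, gym hours have no causal effect on health at all, and every coefficient is invented for illustration.

```python
import random

def mean(values):
    return sum(values) / len(values)

def fit_line(x, y):
    """OLS intercept and slope of y on x."""
    mx, my = mean(x), mean(y)
    b = sum((a - mx) * (c - my) for a, c in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - b * mx, b

def r_squared(x, y, intercept, b):
    """Share of variance in y explained by the fitted line."""
    my = mean(y)
    ss_res = sum((c - (intercept + b * a)) ** 2 for a, c in zip(x, y))
    ss_tot = sum((c - my) ** 2 for c in y)
    return 1 - ss_res / ss_tot

def simulate(n, seed, extra_hours=0.0):
    """Gym hours and health both depend on unobserved lifestyle; hours do not enter health."""
    rng = random.Random(seed)
    hours, health = [], []
    for _ in range(n):
        lifestyle = rng.gauss(0, 1)                               # unobserved
        hours.append(7 + 2 * lifestyle + rng.gauss(0, 1) + extra_hours)
        health.append(50 + 10 * lifestyle + rng.gauss(0, 3))
    return hours, health

train_hours, train_health = simulate(5000, seed=1)
test_hours, test_health = simulate(5000, seed=2)

# Prediction: fit on one sample, score on a fresh one
intercept, b = fit_line(train_hours, train_health)
r2_test = r_squared(test_hours, test_health, intercept, b)

# Intervention: same people (same seed), everyone forced to add 5 gym hours
forced_hours, forced_health = simulate(5000, seed=2, extra_hours=5.0)
predicted_gain = b * 5.0
actual_gain = mean(forced_health) - mean(test_health)

print(f"out-of-sample R^2: {r2_test:.2f}")
print(f"gain implied by the correlation: {predicted_gain:.1f}")
print(f"gain actually realized: {actual_gain:.1f}")
```

In this made-up world, gym hours are a good out-of-sample predictor of health, yet forcing everyone to spend more time at the gym changes nothing. The correlation is useful as a flag and useless as a policy lever, which is the line between analytics and causal inference.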

UPDATE: A very insightful and related article by Tim Harford:

http://timharford.com/2014/04/big-data-are-we-making-a-big-mistake/

"But a theory-free analysis of mere correlations is inevitably fragile. If you have no idea what is behind a correlation, you have no idea what might cause that correlation to break down."

“Big data” has arrived, but big insights have not. The challenge now is to solve new problems and gain new answers – without making the same old statistical mistakes on a grander scale than ever."

It is crucial that if we are interested in causality, we ensure that we are addressing these issues using the appropriate methodology.

See also: http://econometricsense.blogspot.com/2014/01/analytics-vs-causal-inference.html

Monday, April 28, 2014

How is it that Structural Equation Models Subsume Potential Outcomes?

I have been trying to figure out under what conditions we can identify causal effects via SEMs. In particular, is there a framework similar to the Rubin Causal Model or potential outcomes framework that I can utilize in this attempt? In search of an answer I ran across the following article:

Comments and Controversies

Cloak and DAG: A response to the comments on our comment

Martin A. Lindquist, Michael E. Sobel

Neuroimage. 2013 Aug 1;76:446-9

On potential outcomes notation:

"Personally, we find that using this notation helps us to formulate problems clearly and avoid making mistakes, to understand and develop identification conditions for estimating causal effects, and, very importantly, to discuss whether or not such conditions are plausible or implausible in practice (as above). Though quite intuitive, the notation requires a little getting used to, primarily because it is not typically included in early statistical training, but once that is accomplished, the notation is powerful and simple to use. Finally, as a strictly pragmatic matter, the important papers in the literature on causal inference (see especially papers by the 3R's (Robins, Rosenbaum, Rubin, and selected collaborators)) use this notation, making an understanding of it a prerequisite for any neuroimaging researcher who wants to learn more about this subject."

The notation definitely takes a little time getting used to, and it is also true for me that it was not discussed early on in any of my biostatistics or econometrics courses. However, Angrist and Pischke's Mostly Harmless Econometrics does a good job making it more intuitive, with a little effort. Pearl and Bollen both make arguments that SEMs 'subsume' the potential outcomes framework. While this may be true, it's not straightforward to me yet. But I agree it is important to understand how SEMs relate to potential outcomes and causality, or at least understand some framework to support their use in causal inference, as stated in the article:

"Our original note had two aims. First, we wanted neuroimaging researchers to recognize that when they use SEMs to make causal inferences, the validity of the conclusions rest on assumptions above and beyond those required to use an SEM for descriptive or predictive purposes. Unfortunately, these assumptions are rarely made explicit, and in many instances, researchers are not even aware that they are needed. Since these assumptions can have a major impact on the “findings”, it is critical that researchers be aware of them, and even though they may not be testable, that they think carefully about the science behind their problem and utilize their substantive knowledge to carefully consider, before using an SEM, whether or not these assumptions are plausible in the particular problem under consideration."

I think the assumptions they provide (1-4b) lay a foundation in terms that make sense to me from a potential outcomes framework, and the authors hold that these are the assumptions one should think about before using SEMs for causal inference.
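
For readers who have not seen it, the core of the potential outcomes notation fits in a few lines. This is the standard textbook presentation (along the lines of Angrist and Pischke), not something taken from the Lindquist and Sobel article. Here $D_i$ is a binary treatment indicator, and $Y_i(1)$ and $Y_i(0)$ are the potential outcomes for unit $i$ with and without treatment.

```latex
\begin{align*}
Y_i &= D_i\,Y_i(1) + (1 - D_i)\,Y_i(0) \\
\text{ATE} &= E[\,Y_i(1) - Y_i(0)\,] \\
E[Y_i \mid D_i = 1] - E[Y_i \mid D_i = 0]
  &= \underbrace{E[\,Y_i(1) - Y_i(0) \mid D_i = 1\,]}_{\text{effect on the treated}}
   + \underbrace{E[\,Y_i(0) \mid D_i = 1\,] - E[\,Y_i(0) \mid D_i = 0\,]}_{\text{selection bias}}
\end{align*}
```

The last line is why identification conditions matter: the observed difference in means equals a causal effect only when the selection bias term is zero, for example under random assignment.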

Monday, April 14, 2014

Perceptions of GMO Foods: A Hypothetical Application of SEM

So how can we best quantify these ‘latent’ constructs or ‘factors’ that may be related to perceptions of biotechnology, and how do we model these interactions? This will require a combination of techniques involving factor analysis and regression, known as structural equation modeling. We might administer a survey, asking key questions that relate to one’s level of monsantophobia, science knowledge, and political views. To the extent that ‘monsantophobia’ exists and shapes views on biotechnology, it should flavor responses to questions related to fears, skepticism, and mistrust of ‘big ag.’ Actual knowledge of science should influence responses to questions related to science, etc.

We also may want to quantify the actual flavor of perceptions of GMO food. This could be some index quantifying levels of tolerance or preferences related to policies concerning labeling, testing, and regulation or purchasing decisions and expenditures on related goods. To the extent that perceptions are ‘positive’ the index would reflect that on some scale related to answers to survey questions about these issues. You could also include a set of questions related to policy preferences and try to model the interaction of the above factors and their impact on the support for some policy or the general policy environment.

Suppose we ask a range of questions related to skepticism of big ag and agrochemical companies and record the responses to each question as a value for a number of variables (Xm1…Xmn), and do the same for science knowledge (Xs1…Xsn), political ideology (Xp1…Xpn), overall GMO perception (Yp1…Ypn), and policy environment (Ye1…Yen). Given that the values of these variables will be influenced by the actual latent constructs we are trying to measure, we refer to the X’s and Y’s above as ‘indicators’ of the given factors for monsantophobia, science, politics, GMO perception, and policy environment. They may also be referred to as the observable manifest variables.

Now, this is not a perfect system of measurement. Given the level of subjectivity, among other things, there is likely to be a non-negligible amount of measurement error involved. How can we deal with measurement error and quantify the factors? Factor analysis attempts to separate common variance (associated with the factors) from unique variance in a data set. Theoretically, the unique variance in FA is correlated with the measurement error we are concerned about, while the factors remain ‘uncontaminated’ (Dunteman, 1989).

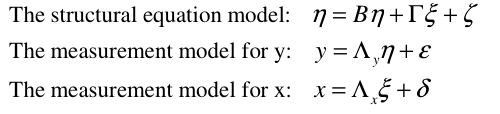
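
A small simulation can illustrate why separating common variance from measurement error matters. This is only a sketch under made-up assumptions: one latent factor, four indicators with equal loadings, and a simple average of the indicators standing in for a factor score (a real factor analysis would estimate the loadings rather than assume equal weights). All names and numbers are hypothetical.

```python
import random

def mean(values):
    return sum(values) / len(values)

def slope(x, y):
    """Simple OLS slope of y on x (with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

rng = random.Random(7)
n = 5000
true_effect = 2.0          # effect of the latent factor on the outcome (assumed)

single, composite, outcome = [], [], []
for _ in range(n):
    factor = rng.gauss(0, 1)                                # latent construct, never observed directly
    items = [factor + rng.gauss(0, 1) for _ in range(4)]    # four noisy survey indicators
    single.append(items[0])                                 # one raw survey response
    composite.append(mean(items))                           # crude stand-in for a factor score
    outcome.append(true_effect * factor + rng.gauss(0, 1))

slope_single = slope(single, outcome)
slope_composite = slope(composite, outcome)

print(f"true effect of the latent factor: {true_effect:.2f}")
print(f"slope using one raw indicator:    {slope_single:.2f}")
print(f"slope using the composite:        {slope_composite:.2f}")
```

With these settings the theoretical slopes are 1.0 for the single indicator and 1.6 for the composite, against a true effect of 2. Both are attenuated by measurement error, but the composite less so, because pooling indicators cancels part of the unique variance. That is the intuition behind using factors rather than raw responses.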

Structural equation modeling (SEM) consists of two models: a measurement model, which consists of deriving the latent constructs or factors previously discussed, and a structural model, which relates the factors to one another, and possibly some outcome. In this case, we are relating the factors for monsantophobia, science, and political preferences to the outcome, which would be the latent construct or index related to GMO perceptions and policy environment. By using the measured ‘factors’ from FA, we can quantify the latent constructs of monsantophobia, science, politics, and GMO perceptions with less measurement error than if we simply included the numeric responses for the X’s and Y’s in a normal regression. SEM then lets us identify the relative influence of each of these factors on GMO perceptions and perhaps even their impact on the general policy environment for biotechnology. This is done in a way similar to regression, by estimating path coefficients for the paths connecting the latent constructs or factors as depicted below.

Equations:
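
What follows is a sketch of how the model described above could be written in conventional LISREL-style notation; it is a reconstruction, not the original equations. The symbols are assumptions chosen for illustration: $\xi_m$, $\xi_s$, $\xi_p$ are the latent factors for monsantophobia, science knowledge, and political ideology; $\eta_1$ is GMO perception and $\eta_2$ the policy environment; the $\lambda$'s are factor loadings, and the $\gamma$'s and $\beta_{21}$ are path coefficients.

```latex
% Measurement model: each indicator loads on its latent factor, j = 1, ..., n
\begin{align*}
X_{mj} &= \lambda_{mj}\,\xi_m + \delta_{mj} \\
X_{sj} &= \lambda_{sj}\,\xi_s + \delta_{sj} \\
X_{pj} &= \lambda_{pj}\,\xi_p + \delta_{pj} \\
Y_{pj} &= \lambda^{y}_{pj}\,\eta_1 + \varepsilon_{pj} \\
Y_{ej} &= \lambda^{y}_{ej}\,\eta_2 + \varepsilon_{ej}
\end{align*}

% Structural model: paths among the latent constructs
\begin{align*}
\eta_1 &= \gamma_{1m}\,\xi_m + \gamma_{1s}\,\xi_s + \gamma_{1p}\,\xi_p + \zeta_1 \\
\eta_2 &= \beta_{21}\,\eta_1 + \zeta_2
\end{align*}
```

The second structural equation, in which GMO perception feeds into the policy environment, is just one possible specification; direct paths from the $\xi$'s to $\eta_2$ could be added as well.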

References:

Principal Components Analysis - SAGE Series on Quantitative Applications in the Social Sciences. Dunteman. 1989.

Awareness and Attitudes towards Biotechnology Innovations among Farmers and Rural Population in the European Union

LUIZA TOMA, LÍVIA MARIA COSTA MADUREIRA, CLARE HALL, ANDREW BARNES, ALAN RENWICK

Paper prepared for presentation at the 131st EAAE Seminar ‘Innovation for Agricultural Competitiveness and Sustainability of Rural Areas’, Prague, Czech Republic, September 18-19, 2012

A Structural Equation Model of Farmers Operating within Nitrate Vulnerable Zones (NVZ) in Scotland

Toma, L., Barnes, A., Willock, J., Hall, C.

12th Congress of the European Association of Agricultural Economists – EAAE 2008

PLoS One. 2014; 9(1): e86174.

Published online Jan 29, 2014. doi: 10.1371/journal.pone.0086174

PMCID: PMC3906022

Determinants of Public Attitudes to Genetically Modified Salmon

Latifah Amin, Md. Abul Kalam Azad, Mohd Hanafy Gausmian, and Faizah Zulkifli

Sunday, April 13, 2014

Intuition for Fixed Effects

I've written about fixed effects before in the context of mixed models. But how are FE useful in the context of causal inference? What can we learn from panel data using FE that we can't get from a standard regression with cross sectional data? Let's view this through a sort of parable, based largely on a very good set of notes produced by J. Blumenstock for a management statistics course (see the reference below).

Suppose we have a restaurant chain and have gathered some cross sectional data on the pricing and consumption of large pizzas for some portion of the day for some period 1 across three cities, as pictured below:

Now, if we are trying to infer the relationship between price and quantity demanded using this data, we notice something odd. The theoretically implied negative relationship does not exist. In fact, if we plot the points, this seems more in line with a supply curve rather than a demand curve:

What's going on that could explain this? One explanation could be specific individual differences across cities related to taste and quality. Perhaps in Chicago, customers' tastes and preferences are for much more expensive and higher quality pizza, and they really like pizza a lot. They may be willing to pay more for more pizzas aligned with their specific tastes and preferences. Perhaps this is also true for San Francisco, but to a lesser extent, and in Atlanta maybe not so much.

What we have is unobserved heterogeneity related to these specific individual effects. How can we account for this? Suppose we instead collected the same data for two periods, essentially creating a panel of data for pizza consumption:

Now, if we look 'within' each city, the data reveals the theoretically implied relationship between price and demand. Take San Francisco for example:

This is essentially what fixed effects estimators using panel data can do. They allow us to exploit the 'within' variation to 'identify' causal relationships. Essentially, using a dummy variable in a regression for each city (or group, or type, to generalize beyond this example) holds constant or 'fixes' the effects across cities that we can't directly measure or observe. Controlling for these differences removes the 'cross-sectional' variation related to unobserved heterogeneity (like tastes, preferences, and other unobserved individual specific effects). The remaining variation, or 'within' variation, can then be used to 'identify' the causal relationships we are interested in.
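
The pooled versus 'within' contrast can be sketched in a few lines of Python. The prices and quantities below are invented for illustration (they are not the figures from the Blumenstock notes), chosen so that Chicago has the highest prices and the highest demand and Atlanta the lowest of both.

```python
from collections import defaultdict

def mean(values):
    return sum(values) / len(values)

def slope(x, y):
    """Simple OLS slope of y on x (with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def demean_by_group(groups, values):
    """Subtract each group's own mean: the 'within' transformation."""
    by_group = defaultdict(list)
    for g, v in zip(groups, values):
        by_group[g].append(v)
    group_mean = {g: mean(vs) for g, vs in by_group.items()}
    return [v - group_mean[g] for g, v in zip(groups, values)]

# Hypothetical panel: (city, period, price, quantity of large pizzas)
panel = [
    ("Chicago", 1, 15.0, 80.0), ("Chicago", 2, 17.0, 70.0),
    ("San Francisco", 1, 12.0, 55.0), ("San Francisco", 2, 14.0, 45.0),
    ("Atlanta", 1, 8.0, 30.0), ("Atlanta", 2, 10.0, 20.0),
]
cities = [row[0] for row in panel]
prices = [row[2] for row in panel]
quantities = [row[3] for row in panel]

# Pooled regression ignores the city: picks up taste differences across cities
pooled_slope = slope(prices, quantities)

# Fixed effects (within) estimator: only variation inside each city is used
within_slope = slope(demean_by_group(cities, prices),
                     demean_by_group(cities, quantities))

print(f"pooled slope: {pooled_slope:.2f}")
print(f"within slope: {within_slope:.2f}")
```

With these numbers the pooled slope is positive (the 'supply curve' illusion), while the within slope is negative, as demand theory implies. Demeaning by city gives the same slope as including a dummy variable for each city; it is just a more compact way to compute it.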

See also: Difference-in-Difference models. These are a special case of fixed effects also used in causal inference.

Reference:

Fixed Effects Models (Very Important Stuff)

www.jblumenstock.com/courses/econ174/FEModels.pdf

Friday, April 11, 2014

Structural Equation Models, Applied Economics, and Biotechnology

Toma gives some nice descriptions of SEM methodology and application:

Awareness and Attitudes towards Biotechnology Innovations among Farmers and Rural Population in the European Union

LUIZA TOMA, LÍVIA MARIA COSTA MADUREIRA, CLARE HALL, ANDREW BARNES, ALAN RENWICK

Paper prepared for presentation at the 131st EAAE Seminar ‘Innovation for Agricultural Competitiveness and Sustainability of Rural Areas’, Prague, Czech Republic, September 18-19, 2012

SEM may consist of two components, namely the measurement model (which states the relationships between the latent variables and their constituent indicators), and the structural model (which designates the causal relationships between the latent variables). The measurement model resembles factor analysis, where latent variables represent ‘shared’ variance, or the degree to which indicators ‘move’ together. The structural model is similar to a system of simultaneous regressions, with the difference that in SEM some variables can be dependent in some equations and independent in others.
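
The point that a variable can be dependent in one equation and independent in another can be sketched with a two-equation recursive path model. This is a simplification that uses observed variables only (no latent constructs or measurement model) and estimates one equation at a time by OLS; the variable names and coefficients are hypothetical and are not taken from Toma et al.

```python
import random

def mean(values):
    return sum(values) / len(values)

def slope(x, y):
    """Simple OLS slope of y on x (with an intercept)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

rng = random.Random(11)
n = 5000
awareness, attitude, behaviour = [], [], []
for _ in range(n):
    aw = rng.gauss(0, 1)
    at = 0.6 * aw + rng.gauss(0, 0.8)     # equation 1: attitude depends on awareness
    be = 0.5 * at + rng.gauss(0, 1)       # equation 2: behaviour depends on attitude
    awareness.append(aw)
    attitude.append(at)
    behaviour.append(be)

a_hat = slope(awareness, attitude)    # attitude is the dependent variable here...
b_hat = slope(attitude, behaviour)    # ...and an independent variable here
indirect_effect = a_hat * b_hat       # awareness -> attitude -> behaviour

print(f"awareness -> attitude path:  {a_hat:.2f}")
print(f"attitude -> behaviour path:  {b_hat:.2f}")
print(f"indirect effect of awareness: {indirect_effect:.2f}")
```

A full SEM would estimate these paths jointly with the measurement model, but the structure is the same: attitude sits on the left-hand side of one equation and the right-hand side of the other.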

A Structural Equation Model of Farmers Operating within Nitrate Vulnerable Zones (NVZ) in Scotland

Toma, L., Barnes, A., Willock, J., Hall, C.

12th Congress of the European Association of Agricultural Economists – EAAE 2008

To identify the factors determining farmers’ nitrate reducing behaviour, we follow the attitude-behaviour framework as used in most literature on agri-environmental issues. To statistically test the relationships within this framework, we use structural equation modelling (SEM) with latent (unobserved) variables. We first identify the latent variables structuring the model and their constituent indicators. Then, we validate the construction of the latent variables by means of factor analysis and finally, we build and test the structural equation model by assigning the relevant relationships between the different latent variables.

See also:

PLoS One. 2014; 9(1): e86174.

Published online Jan 29, 2014. doi: 10.1371/journal.pone.0086174

PMCID: PMC3906022

Determinants of Public Attitudes to Genetically Modified Salmon

Latifah Amin, Md. Abul Kalam Azad, Mohd Hanafy Gausmian, and Faizah Zulkifli
